Friday, January 24, 2025

Still Using Comparison Test for Your Product Testing? A Comprehensive Guide

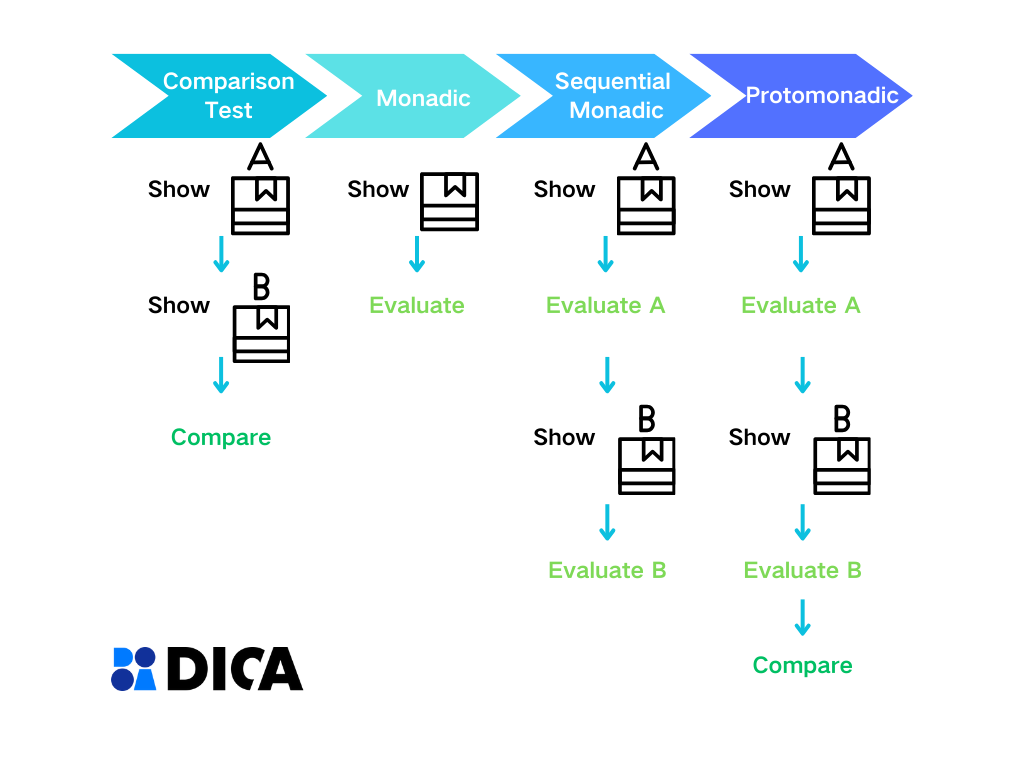

When it comes to evaluating product concepts, features, or marketing strategies, selecting the right testing method can be the key to actionable insights. Comparison Test, Monadic, Sequential Monadic, and Protomonadic are popular approaches, each with distinct advantages and limitations. This article explains these methods, compares their strengths, and guides you to choose the most suitable approach for your needs.

The Four Methods at a Glance

1. Comparison Test

A direct evaluation method where respondents compare multiple concepts and select the "best" based on their preferences. It’s a high-level tool often used in the concept validation stage.

- How It Works: Present all test concepts (e.g., designs, features, ads) to respondents simultaneously and ask them to choose the one they prefer most. Questions can range from "Which design grabs your attention?" to "Which ad conveys trustworthiness better?"
- Best Practices: To avoid cognitive overload, limit the number of concepts to 5 or fewer. If testing elements with varying levels of importance (e.g., colors vs. text), consider weighting responses during analysis. This method pairs well with Conjoint Analysis for deeper segmentation.
- Pros:
  - Simple, fast, and intuitive.
  - Provides immediate direction for A/B testing refinements.
- Cons:
  - Lacks granularity in understanding why one concept outperforms others. A simple choice does not reveal which details appeal to respondents, which can lead to incorrect decisions or missed selling points.
  - Respondents may face decision fatigue with too many options. In the early phases of product development there may be dozens of candidate concepts, and presenting them all for respondents to choose from is overwhelming and often infeasible.
  - The risk of recency bias increases with a higher number of concepts and longer time gaps between them: respondents may struggle to recall earlier concepts clearly, so later ones stand out more prominently.
- Industry Insight: Comparison Tests shine in rapid iteration cycles when speed-to-insight is crucial. For instance, brands often use this during pre-launch branding exercises to refine logo options (Logo Testing Guide).
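The analysis of a Comparison Test can be as simple as counting first-choice votes. The sketch below is only an illustration: the function name `preference_shares` and the sample responses are hypothetical, not part of any survey platform.

```python
from collections import Counter

def preference_shares(choices):
    """Return each concept's share of first-choice votes, highest first."""
    counts = Counter(choices)
    total = sum(counts.values())
    return {concept: count / total for concept, count in counts.most_common()}

# Hypothetical answers to "Which design grabs your attention?"
responses = ["A", "C", "A", "B", "A", "C", "A", "B"]
shares = preference_shares(responses)
for concept, share in shares.items():
    print(f"Concept {concept}: {share:.0%}")
```

Note that the output says which concept won, not why, which is exactly the granularity limitation listed under Cons.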

2. Monadic Testing

This is the gold standard for in-depth analysis, where respondents evaluate a single concept in isolation. The idea is to eliminate noise and distractions, allowing for unbiased feedback.

- How It Works: Divide respondents into equally sized groups, and assign each group a single concept. For example, if testing 3 product designs, Group A reviews Design 1, Group B reviews Design 2, and so on.
- Best Practices: Combine qualitative (e.g., Focus Groups) and quantitative questions. Use tools like Likert Scales to gauge emotional responses while capturing specific feedback with Open-Ended Questions. If testing brand-new concepts, supplement this with qualitative interviews to identify user perceptions.
- Pros:
  - Highly detailed feedback with minimal respondent bias.
  - Ideal for understanding the "why" behind preferences.
  - Keeps each survey short, which helps avoid survey fatigue, especially in online surveys.
- Cons:
  - Requires a significantly larger sample size to ensure statistical significance. To be representative, each subgroup must reach a minimum size on its own. It is also challenging to recruit every subgroup under identical sampling conditions, especially when the target audience is highly niche.
  - Costs escalate rapidly with multiple test groups. Every additional concept needs its own full group of respondents, so the total sample size grows in direct proportion to the number of concepts, and research costs rise with it.
- Industry Insight: Monadic Testing is the go-to for Customer Satisfaction (CSAT) studies and evaluating highly nuanced product attributes like premium finishes or usability features.
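As a minimal sketch of the group assignment described under How It Works, the snippet below shuffles respondents and deals them out to concepts in turn, so the groups are random and equally sized. The names `assign_monadic` and `total_sample`, and the figure of 150 respondents per group, are assumptions for illustration only.

```python
import random

def assign_monadic(respondent_ids, concepts, seed=None):
    """Randomly split respondents into one equally sized group per concept."""
    rng = random.Random(seed)
    shuffled = list(respondent_ids)
    rng.shuffle(shuffled)
    groups = {concept: [] for concept in concepts}
    # Deal respondents out round-robin so group sizes differ by at most one.
    for i, rid in enumerate(shuffled):
        groups[concepts[i % len(concepts)]].append(rid)
    return groups

def total_sample(n_concepts, per_group):
    """Total respondents needed when every concept gets its own group."""
    return n_concepts * per_group

designs = ["Design 1", "Design 2", "Design 3"]
groups = assign_monadic(range(300), designs, seed=1)
print({design: len(members) for design, members in groups.items()})
# 150 per group is a hypothetical target, not a recommendation.
print(total_sample(3, 150), total_sample(6, 150))
```

Doubling the number of concepts doubles the total sample, which is why Monadic Testing becomes expensive quickly.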

3. Sequential Monadic Testing

A streamlined alternative to Monadic Testing, this approach lets respondents evaluate multiple concepts one after another. Because each respondent sees more than one concept, it needs a much smaller sample than Monadic Testing while retaining useful insights, which makes it well suited to surveys with limited budgets or samples.

- How It Works: Respondents review a randomized sequence of concepts. For instance, if testing three concepts, some participants see Concept A first, followed by B and C, while others see the concepts in a different order.
- Best Practices: To combat order effects like primacy or recency bias, ensure proper randomization. Use automation tools or survey platforms with built-in algorithms for randomization and balanced exposure.
- Pros:
  - Reduces sample size and cost.
  - Offers a middle ground between depth and efficiency.
- Cons:
  - Longer surveys increase respondent fatigue.
  - Feedback quality may diminish for concepts shown later in the sequence.
- Industry Insight: Sequential Monadic Testing is particularly effective for feature prioritization exercises where iterative feedback drives product roadmaps (Predictive Analytics).
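If your survey platform does not handle randomization for you, balanced exposure can be approximated by hand. The sketch below cycles through every possible presentation order so that each concept appears in each position equally often; `balanced_orders` is a hypothetical helper, and the approach assumes a small number of concepts, since the number of orders grows factorially (3 concepts give 6 orders, 5 concepts give 120).

```python
import itertools
import random
from collections import Counter

def balanced_orders(respondent_ids, concepts, seed=None):
    """Give each respondent a presentation order, rotating through all orders."""
    rng = random.Random(seed)
    orders = list(itertools.permutations(concepts))
    rng.shuffle(orders)
    ids = list(respondent_ids)
    rng.shuffle(ids)  # avoid tying the order to sign-up sequence
    return {rid: orders[i % len(orders)] for i, rid in enumerate(ids)}

plan = balanced_orders(range(60), ["A", "B", "C"], seed=7)
first_shown = Counter(order[0] for order in plan.values())
print(first_shown)
```

With 60 respondents and 6 possible orders, every order is used 10 times, so each concept is shown first to exactly 20 respondents. For larger concept sets, a Latin square design is a common lighter-weight alternative.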

4. Protomonadic Testing

In Sequential Monadic testing, while the number of concepts assessed at a time is relatively small—often as few as two—the order in which they are presented can subtly influence respondents. To address this, a comparison test can follow the Sequential Monadic phase, asking participants to select their preferred option from the previously evaluated concepts.

Protomonadic Testing is therefore a hybrid method that combines Sequential Monadic and Comparison Test. Respondents first evaluate each concept individually and then compare them to select a favorite. This dual-layer approach yields both detailed individual feedback and overarching preferences.

- How It Works: First, conduct a Sequential Monadic Test to collect granular data on each concept. Then, follow up with a Comparison Test asking, "If you had to choose one, which would it be?" This method validates both detailed feedback and holistic preference.
- Best Practices:
  - Limit concepts to 3–5 to avoid overwhelming respondents.
  - Use this method when testing new ideas alongside established benchmarks to ensure alignment with user expectations.
- Pros:
  - Provides two layers of validation: detailed insights and preference rankings.
  - Controls for biases like recency or fatigue through structured design.
- Cons:
  - Complex to design and analyze due to dual testing phases.
  - Slightly longer survey times, requiring respondent incentives.
- Industry Insight: This method is ideal for de-risking high-stakes decisions like go-to-market strategies or new product launches.
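To show why the second layer matters, here is a rough sketch of how the two phases might be summarized side by side. The response format (a `ratings` dict plus a `favorite` field), the 1–5 rating scale, and the function name `summarize_protomonadic` are all assumptions made for this example.

```python
from collections import Counter
from statistics import mean

def summarize_protomonadic(responses):
    """Combine per-concept mean ratings with the share of final 'favorite' picks."""
    ratings = {}
    picks = Counter()
    for response in responses:
        for concept, score in response["ratings"].items():
            ratings.setdefault(concept, []).append(score)
        picks[response["favorite"]] += 1
    n = len(responses)
    return {
        concept: {"mean_rating": mean(scores), "favorite_share": picks[concept] / n}
        for concept, scores in ratings.items()
    }

# Hypothetical data: ratings on a 1-5 scale, then a forced choice.
responses = [
    {"ratings": {"A": 4, "B": 5}, "favorite": "B"},
    {"ratings": {"A": 3, "B": 4}, "favorite": "A"},
    {"ratings": {"A": 5, "B": 3}, "favorite": "A"},
    {"ratings": {"A": 4, "B": 4}, "favorite": "A"},
]
summary = summarize_protomonadic(responses)
print(summary)
```

In this made-up data both concepts have the same mean rating, yet three of four respondents pick Concept A when forced to choose. The comparison phase surfaces a preference that the individual ratings alone would hide.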

How to Choose the Right Method

- Define Objectives
  - Focused feedback on specific features? Go Monadic.
  - Comparative clarity across multiple options? Choose Comparison Test or Protomonadic.
- Resource Assessment
  - Limited budget? Sequential Monadic reduces costs while retaining useful depth.
  - Large-scale studies? Monadic Testing remains unmatched for depth and reliability.
- Audience Segmentation
  - For niche or B2B audiences, start small with Sequential Monadic or Protomonadic to test the waters.
  - For broad consumer markets, Monadic Testing ensures representative feedback.

Common Pitfalls and Solutions

- Survey Fatigue: Overloading respondents? Keep sessions under 15 minutes and limit tested concepts to 5 or fewer.
- Bias Control: Use advanced randomization and avoid leading questions.
- Sample Size Issues: Leverage panel services for quick access to diverse audiences.

Conclusion

Choosing the right testing method isn’t one-size-fits-all. By understanding the nuances of Comparison Test, Monadic, Sequential Monadic, and Protomonadic, you can tailor your approach to your objectives, budget, and audience. When done right, these methods unlock powerful insights, guiding data-driven decisions.

For related tools, explore Concept Testing, Paired Comparison Test, and Customer Satisfaction to further refine your product research.
